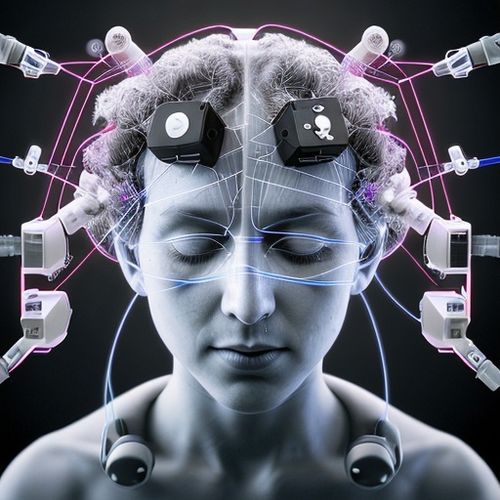

The field of brain-computer interfaces (BCIs) has reached a notable milestone with the completion of clinical trials for an electroencephalography (EEG)-controlled electronic music interface. Developed by a consortium of neuroscientists, sound engineers, and software developers, the system lets users compose and manipulate electronic music in real time using nothing but their brainwaves. The implications extend far beyond music and could redefine accessibility in creative fields for people with physical disabilities.

Over the past eighteen months, researchers conducted rigorous testing across three medical centers with participants ranging from professional musicians to quadriplegic patients. The interface translates specific neural patterns into musical parameters—alpha waves might control tempo, while beta waves modulate pitch. What makes this system unique is its bidirectional feedback; the generated music simultaneously influences the user's brain activity, creating a closed-loop system that participants described as "thinking through sound."
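To make the mapping idea concrete, here is a minimal Python sketch of how band activity could be turned into musical parameters. It is an illustration only, not the trial system's method: the band edges (8 to 12 Hz for alpha, 13 to 30 Hz for beta) follow common convention, while the function names, the 1 to 40 Hz reference range, and the tempo and pitch ranges are all assumptions made for this example.

```python
import numpy as np

ALPHA_BAND = (8.0, 12.0)    # Hz, conventional alpha range
BETA_BAND = (13.0, 30.0)    # Hz, conventional beta range
BROAD_BAND = (1.0, 40.0)    # Hz, assumed reference range for normalization


def band_power(signal, fs, band):
    """Summed spectral power of `signal` inside `band` (Hz), via the real FFT."""
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    power = np.abs(np.fft.rfft(signal)) ** 2
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return float(power[mask].sum())


def band_share(signal, fs, band):
    """Fraction of broadband (1-40 Hz) power that falls inside `band`."""
    total = band_power(signal, fs, BROAD_BAND)
    if total == 0.0:
        return 0.0
    return band_power(signal, fs, band) / total


def to_music_params(signal, fs, tempo_range=(60.0, 180.0), pitch_range=(48, 84)):
    """Hypothetical mapping: alpha share -> tempo (BPM), beta share -> MIDI pitch."""
    alpha = band_share(signal, fs, ALPHA_BAND)
    beta = band_share(signal, fs, BETA_BAND)
    tempo = tempo_range[0] + alpha * (tempo_range[1] - tempo_range[0])
    pitch = int(round(pitch_range[0] + beta * (pitch_range[1] - pitch_range[0])))
    return tempo, pitch


# Usage: two seconds of a synthetic 10 Hz (alpha-range) oscillation at 256 Hz.
fs = 256
t = np.arange(2 * fs) / fs
tempo, pitch = to_music_params(np.sin(2 * np.pi * 10 * t), fs)
```

With a pure alpha-range input, the tempo lands at the top of the assumed range and the pitch at the bottom. A real interface would need per-user calibration and artifact rejection, neither of which is shown here.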

Clinical results published in the Journal of Neuroengineering revealed astonishing outcomes. Nearly 78% of subjects achieved proficient control within five training sessions, with some composing complete musical phrases by the third session. The trials also demonstrated unexpected therapeutic benefits—several patients with traumatic brain injuries showed improved cognitive function after prolonged use. Dr. Elena Vasquez, lead neurologist on the project, noted, "We're seeing neural plasticity in action as users develop new pathways to interact with sound."

The technology builds upon existing EEG caps but introduces proprietary dry-electrode sensors that eliminate the need for conductive gel. Signal processing algorithms filter out ambient noise while preserving the subtle neural signatures corresponding to musical creativity. During testing, the system successfully distinguished between intentional compositional commands and background brain activity with 94.3% accuracy—a critical milestone for practical applications.
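The article does not describe the actual signal-processing pipeline, so the following Python sketch only illustrates the general idea under stated assumptions: a crude FFT-based band-pass filter removes out-of-band noise (such as mains hum), a simple power threshold flags an epoch as an intentional command, and accuracy is the fraction of epochs labeled correctly. The function names, the 8 to 30 Hz pass band, and the threshold value are hypothetical.

```python
import numpy as np


def fft_bandpass(signal, fs, low, high):
    """Zero spectral components outside [low, high] Hz, then invert the FFT."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    spectrum[(freqs < low) | (freqs > high)] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))


def is_command(epoch, fs, threshold, band=(8.0, 30.0)):
    """Hypothetical detector: in-band mean power above `threshold` is a command."""
    filtered = fft_bandpass(epoch, fs, band[0], band[1])
    return bool(np.mean(filtered ** 2) > threshold)


def accuracy(predictions, labels):
    """Fraction of epochs where the prediction matches the true label."""
    predictions = np.asarray(predictions, dtype=bool)
    labels = np.asarray(labels, dtype=bool)
    return float(np.mean(predictions == labels))


# Usage: one second of synthetic data at 256 Hz.
fs = 256
t = np.arange(fs) / fs
background = 2.0 * np.sin(2 * np.pi * 50 * t)          # mains hum only
command = background + np.sin(2 * np.pi * 10 * t)      # hum plus a 10 Hz rhythm
predictions = [is_command(e, fs, threshold=0.1) for e in (command, background)]
score = accuracy(predictions, [True, False])
```

A figure like the reported 94.3% would come from running this kind of comparison over many labeled epochs. A production system would more likely use a trained classifier than a fixed threshold.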

Ethical considerations emerged throughout the trials, particularly regarding the system's potential to access subconscious musical preferences. Some participants reported the interface "knew what sound I wanted before I did." The research team implemented strict neural data protocols, ensuring all brainwave information remains encrypted and user-controlled. These safeguards will be crucial as the technology moves toward commercialization.

Music professionals involved in testing offered their own perspectives. Electronic producer Markus "Wave" Henderson described the experience: "It's like your thoughts become the mixer: no latency, no translation, just pure idea to sound." Meanwhile, Jonathan Pierce, a classical violinist who survived a stroke, found that the system restored his creative expression: "After losing hand function, I thought I'd never make music again. This gives me back my voice."

The research team is now pursuing FDA clearance for therapeutic applications while partnering with music technology companies to develop a consumer version. Early prototypes suggest the final product may integrate with standard digital audio workstations, potentially revolutionizing music production workflows. As the project transitions from clinical research to real-world implementation, it promises to blur the boundaries between mind and machine in unprecedented ways—ushering in what developers call "the era of neural composition."

Beyond music, the trial's success validates broader applications for EEG-based creative interfaces. The same underlying technology could enable brainwave-controlled visual art systems or assistive communication devices. With venture capital firms already investing heavily in neurotechnology spinoffs, this clinical milestone may represent the tipping point for mainstream adoption of BCI tools. As one trial participant poetically remarked, "We've always used instruments to externalize our inner music—now we've removed the middleman."

Looking ahead, researchers aim to refine the system's resolution to detect more nuanced musical intentions while reducing the learning curve. Future iterations may incorporate machine learning to adapt to individual users' neural patterns. The team also plans longitudinal studies to examine how prolonged use affects both musical creativity and general cognitive function. What began as an experimental interface now stands poised to transform how humans create and experience music at the most fundamental level.

By John Smith/Apr 14, 2025
